Mirror of https://github.com/sasjs/core.git

Synced 2025-12-30 06:00:06 +00:00

Compare commits

15 Commits

| SHA1 |
|---|
| 7f867e2a5c |
| c6af6ce578 |
| a1aac785c0 |
| dbe8b0b1c3 |
| 2ee9a4cee4 |
| 3a7afdffb7 |
| c78211aa1c |
| 76c49e96f2 |
| 984ea44f5d |
| 88f1222abd |
| d88f028ee3 |
| 07d7c9df4b |
| 6765a1d025 |
| 952f28a872 |
| 8246b5a42c |

30 .github/vpn/config.ovpn vendored

@@ -1,30 +0,0 @@
cipher AES-256-CBC
setenv FORWARD_COMPATIBLE 1
client
server-poll-timeout 4
nobind
remote vpn.analytium.co.uk 1194 udp
remote vpn.analytium.co.uk 1194 udp
remote vpn.analytium.co.uk 443 tcp
remote vpn.analytium.co.uk 1194 udp
remote vpn.analytium.co.uk 1194 udp
remote vpn.analytium.co.uk 1194 udp
remote vpn.analytium.co.uk 1194 udp
remote vpn.analytium.co.uk 1194 udp
dev tun
dev-type tun
ns-cert-type server
setenv opt tls-version-min 1.0 or-highest
reneg-sec 604800
sndbuf 0
rcvbuf 0
# NOTE: LZO commands are pushed by the Access Server at connect time.
# NOTE: The below line doesn't disable LZO.
comp-lzo no
verb 3
setenv PUSH_PEER_INFO

ca ca.crt
cert user.crt
key user.key
tls-auth tls.key 1

25 .github/workflows/run-tests.yml vendored

@@ -21,31 +21,6 @@ jobs:
        with:
          node-version: ${{ matrix.node-version }}

      - name: Write VPN Files
        run: |
          echo "$CA_CRT" > .github/vpn/ca.crt
          echo "$USER_CRT" > .github/vpn/user.crt
          echo "$USER_KEY" > .github/vpn/user.key
          echo "$TLS_KEY" > .github/vpn/tls.key
        shell: bash
        env:
          CA_CRT: ${{ secrets.CA_CRT}}
          USER_CRT: ${{ secrets.USER_CRT }}
          USER_KEY: ${{ secrets.USER_KEY }}
          TLS_KEY: ${{ secrets.TLS_KEY }}

      - name: Install Open VPN
        run: |
          sudo apt install apt-transport-https
          sudo wget https://swupdate.openvpn.net/repos/openvpn-repo-pkg-key.pub
          sudo apt-key add openvpn-repo-pkg-key.pub
          sudo wget -O /etc/apt/sources.list.d/openvpn3.list https://swupdate.openvpn.net/community/openvpn3/repos/openvpn3-focal.list
          sudo apt update
          sudo apt install openvpn3

      - name: Start Open VPN 3
        run: openvpn3 session-start --config .github/vpn/config.ovpn

      - name: Install Doxygen
        run: sudo apt-get install doxygen

@@ -237,6 +237,7 @@ If you find this library useful, please leave a [star](https://github.com/sasjs/

The following repositories are also worth checking out:

* [xieliaing/SAS](https://github.com/xieliaing/SAS)
* [SASJedi/sas-macros](https://github.com/SASJedi/sas-macros)
* [chris-swenson/sasmacros](https://github.com/chris-swenson/sasmacros)
* [greg-wootton/sas-programs](https://github.com/greg-wootton/sas-programs)

266 all.sas

@@ -4112,11 +4112,14 @@ proc sql;
%mp_deleteconstraints(inds=work.constraints,outds=dropped,execute=YES)
%mp_createconstraints(inds=work.constraints,outds=created,execute=YES)

@param inds= The input table containing the constraint info
@param outds= a table containing the create statements (create_statement column)
@param execute= `YES|NO` - default is NO. To actually create, use YES.
@param inds= (work.mp_getconstraints) The input table containing the
constraint info
@param outds= (work.mp_createconstraints) A table containing the create
statements (create_statement column)
@param execute= (NO) To actually create, use YES.

<h4> SAS Macros </h4>
<h4> Related Files </h4>
@li mp_getconstraints.sas

@version 9.2
@author Allan Bowe

@@ -4124,7 +4127,7 @@ proc sql;
**/

%macro mp_createconstraints(inds=mp_getconstraints
  ,outds=mp_createconstraints
  ,outds=work.mp_createconstraints
  ,execute=NO
)/*/STORE SOURCE*/;

@@ -4158,7 +4161,8 @@ data &outds;
  output;
run;

%mend mp_createconstraints;/**
%mend mp_createconstraints;
/**
@file mp_createwebservice.sas
@brief Create a web service in SAS 9, Viya or SASjs Server
@details This is actually a wrapper for mx_createwebservice.sas, remaining

@@ -4450,6 +4454,58 @@ run;
%end;
%else %put &sysmacroname: &folder: is not a valid / accessible folder. ;
%mend mp_deletefolder;/**
@file mp_dictionary.sas
@brief Creates a portal (libref) into the SQL Dictionary Views
@details Provide a libref and the macro will create a series of views against
each view in the special PROC SQL dictionary libref.

This is useful if you would like to visualise (navigate) the views in a SAS
client such as Base SAS, Enterprise Guide, or Studio (or [Data Controller](
https://datacontroller.io)).

It works by extracting the dictionary.dictionaries view into
YOURLIB.dictionaries, then uses that to create a YOURLIB.{viewName} for every
other dictionary.view, eg:

proc sql;
create view YOURLIB.columns as select * from dictionary.columns;

Usage:

libname demo "/lib/directory";
%mp_dictionary(lib=demo)

Or, to just create them in WORK:

%mp_dictionary()

If you'd just like to browse the dictionary data model, you can also check
out [this article](https://rawsas.com/dictionary-of-dictionaries/).

@param lib= (WORK) The libref in which to create the views

<h4> Related Files </h4>
@li mp_dictionary.test.sas

@version 9.2
@author Allan Bowe

**/

%macro mp_dictionary(lib=WORK)/*/STORE SOURCE*/;
  %local list i mem;
  proc sql noprint;
  create view &lib..dictionaries as select * from dictionary.dictionaries;
  select distinct memname into: list separated by ' ' from &lib..dictionaries;
  %do i=1 %to %sysfunc(countw(&list,%str( )));
    %let mem=%scan(&list,&i,%str( ));
    create view &lib..&mem as select * from dictionary.&mem;
  %end;
  quit;
%mend mp_dictionary;
/**
@file
@brief Returns all files and subdirectories within a specified parent
@details When used with getattrs=NO, is not OS specific (uses dopen / dread).

@@ -4478,6 +4534,9 @@ run;
@param [in] maxdepth= (0) Set to a positive integer to indicate the level of
subdirectory scan recursion - eg 3, to go `./3/levels/deep`. For unlimited
recursion, set to MAX.
@param [in] showparent= (NO) By default, the initial parent directory is not
part of the results. Set to YES to include it. For this record only,
directory=filepath.
@param [out] outds= (work.mp_dirlist) The output dataset to create
@param [out] getattrs= (NO) If getattrs=YES then the doptname / foptname
functions are used to scan all properties - any characters that are not

@@ -4514,6 +4573,7 @@ run;
  , fref=0
  , outds=work.mp_dirlist
  , getattrs=NO
  , showparent=NO
  , maxdepth=0
  , level=0 /* The level of recursion to perform. For internal use only. */
)/*/STORE SOURCE*/;

@@ -4596,6 +4656,15 @@ data &out_ds(compress=no
    output;
  end;
  rc = dclose(did);
  %if &showparent=YES and &level=0 %then %do;
    filepath=directory;
    file_or_folder='folder';
    ext='';
    filename=scan(directory,-1,'/\');
    msg='';
    level=&level;
    output;
  %end;
  stop;
run;

@@ -4683,6 +4752,9 @@ run;
data _null_;
  set &out_ds;
  where file_or_folder='folder';
  %if &showparent=YES and &level=0 %then %do;
    if filepath ne directory;
  %end;
  length code $10000;
  code=cats('%nrstr(%mp_dirlist(path=',filepath,",outds=&outds"
    ,",getattrs=&getattrs,level=%eval(&level+1),maxdepth=&maxdepth))");

@@ -5698,7 +5770,7 @@ data _null_;

|

||||

run;

|

||||

|

||||

%if %upcase(&showlog)=YES %then %do;

|

||||

options ps=max;

|

||||

options ps=max lrecl=max;

|

||||

data _null_;

|

||||

infile &outref;

|

||||

input;

|

||||

@@ -5706,7 +5778,8 @@ run;

|

||||

run;

|

||||

%end;

|

||||

|

||||

%mend mp_ds2md;/**

|

||||

%mend mp_ds2md;

|

||||

/**

|

||||

@file

|

||||

@brief Create a smaller version of a dataset, without data loss

|

||||

@details This macro will scan the input dataset and create a new one, that

|

||||

@@ -8497,7 +8570,7 @@ run;

|

||||

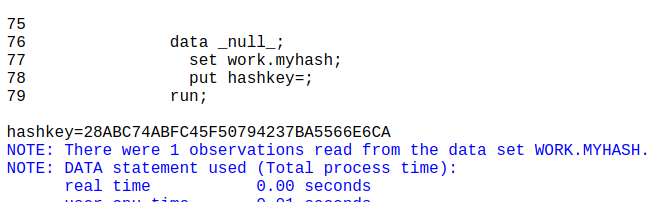

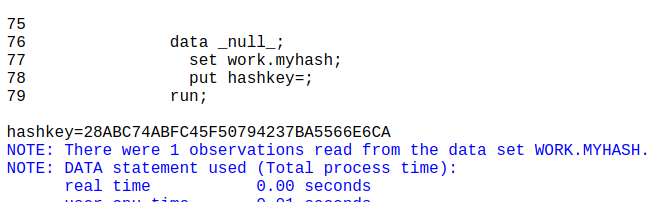

put hashkey=;

|

||||

run;

|

||||

|

||||

|

||||

|

||||

|

||||

<h4> SAS Macros </h4>

|

||||

@li mf_getattrn.sas

|

||||

@@ -8507,11 +8580,12 @@ run;

|

||||

|

||||

<h4> Related Files </h4>

|

||||

@li mp_hashdataset.test.sas

|

||||

@li mp_hashdirectory.sas

|

||||

|

||||

@param [in] libds dataset to hash

|

||||

@param [in] salt= Provide a salt (could be, for instance, the dataset name)

|

||||

@param [in] iftrue= A condition under which the macro should be executed.

|

||||

@param [out] outds= (work.mf_hashdataset) The output dataset to create. This

|

||||

@param [in] iftrue= (1=1) A condition under which the macro should be executed

|

||||

@param [out] outds= (work._data_) The output dataset to create. This

|

||||

will contain one column (hashkey) with one observation (a $hex32.

|

||||

representation of the input hash)

|

||||

|hashkey:$32.|

|

||||

@@ -8574,6 +8648,168 @@ run;
  run;
%end;
%mend mp_hashdataset;
/**
@file
@brief Returns a unique hash for each file in a directory
@details Hashes each file in each directory, and then hashes the hashes to
create a hash for each directory also.

This makes use of the new `hashing_file()` and `hashing` functions, available
since 9.4m6. Interestingly, these can even be used in pure macro, eg:

%put %sysfunc(hashing_file(md5,/path/to/file.blob,0));

Actual usage:

%let fpath=/some/directory;

%mp_hashdirectory(&fpath,outds=myhash,maxdepth=2)

data _null_;
set work.myhash;
put (_all_)(=);
run;

Whilst files are hashed in their entirety, the logic for creating a folder
hash is as follows:

@li Sort the files by filename (case sensitive, uppercase then lower)
@li Take the first 100 hashes, concatenate and hash
@li Concatenate this hash with another 100 hashes and hash again
@li Continue until the end of the folder. This is the folder hash
@li If a folder contains other folders, start from the bottom of the tree -
the folder hashes cascade upwards so you know immediately if there is a
change in a sub/sub directory
@li If the folder has no content (empty) then it is ignored. No hash created.
@li If the file is empty, it is also ignored / no hash created.

<h4> SAS Macros </h4>
@li mp_dirlist.sas

<h4> Related Files </h4>
@li mp_hashdataset.sas
@li mp_hashdirectory.test.sas
@li mp_md5.sas

@param [in] inloc Full filepath of the file to be hashed (unquoted)
@param [in] iftrue= (1=1) A condition under which the macro should be executed
@param [in] maxdepth= (0) Set to a positive integer to indicate the level of
subdirectory scan recursion - eg 3, to go `./3/levels/deep`. For unlimited
recursion, set to MAX.
@param [in] method= (MD5) the hashing method to use. Available options:
@li MD5
@li SHA1
@li SHA256
@li SHA384
@li SHA512
@li CRC32
@param [out] outds= (work.mp_hashdirectory) The output dataset. Contains:
@li directory - the parent folder
@li file_hash - the hash output
@li hash_duration - how long the hash took (first hash always takes longer)
@li file_path - /full/path/to/each/file.ext
@li file_or_folder - contains either "file" or "folder"
@li level - the depth of the directory (top level is 0)

@version 9.4m6
@author Allan Bowe
**/

%macro mp_hashdirectory(inloc,
  outds=work.mp_hashdirectory,
  method=MD5,
  maxdepth=0,
  iftrue=%str(1=1)
)/*/STORE SOURCE*/;

%local curlevel tempds ;

%if not(%eval(%unquote(&iftrue))) %then %return;

/* get the directory listing */
%mp_dirlist(path=&inloc, outds=&outds, maxdepth=&maxdepth, showparent=YES)

/* create the hashes */
data &outds;
  set &outds (rename=(filepath=file_path));
  length FILE_HASH $32 HASH_DURATION 8;
  keep directory file_hash hash_duration file_path file_or_folder level;

  ts=datetime();
  if file_or_folder='file' then do;
    /* if file is empty, hashing_file will break - so ignore / delete */
    length fname val $8;
    drop fname val fid is_empty;
    rc=filename(fname,file_path);
    fid=fopen(fname);
    if fid > 0 then do;
      rc=fread(fid);
      is_empty=fget(fid,val);
    end;
    rc=fclose(fid);
    rc=filename(fname);
    if is_empty ne 0 then delete;
    else file_hash=hashing_file("&method",cats(file_path),0);
  end;
  hash_duration=datetime()-ts;
run;

proc sort data=&outds ;
  by descending level directory file_path;
run;

data _null_;
  set &outds;
  call symputx('maxlevel',level,'l');
  stop;
run;

/* now hash the hashes to populate folder hashes, starting from the bottom */
%do curlevel=&maxlevel %to 0 %by -1;
  data work._data_ (keep=directory file_hash);
    set &outds;
    where level=&curlevel;
    by descending level directory file_path;
    length str $32767 tmp_hash $32;
    retain str tmp_hash ;
    /* reset vars when starting a new directory */
    if first.directory then do;
      str='';
      tmp_hash='';
      i=0;
    end;
    /* hash each chunk of 100 file paths */
    i+1;
    str=cats(str,file_hash);
    if mod(i,100)=0 or last.directory then do;
      tmp_hash=hashing("&method",cats(tmp_hash,str));
      str='';
    end;
    /* output the hash at directory level */
    if last.directory then do;
      file_hash=tmp_hash;
      output;
    end;
    if last.level then stop;
  run;
  %let tempds=&syslast;
  /* join the hash back into the main table */
  proc sql undo_policy=none;
  create table &outds as
    select a.directory
      ,coalesce(b.file_hash,a.file_hash) as file_hash
      ,a.hash_duration
      ,a.file_path
      ,a.file_or_folder
      ,a.level
    from &outds a
    left join &tempds b
    on a.file_path=b.directory
    order by level desc, directory, file_path;
  drop table &tempds;
%end;

%mend mp_hashdirectory;
/**
@file
@brief Performs a wrapped \%include

@@ -21959,7 +22195,8 @@ run;
@param [in] contentdisp= (inline) Content Disposition. Example values:
@li inline
@li attachment

@param [in] ctype= (0) Set a default HTTP Content-Type header to be returned
with the file when the content is retrieved from the Files service.
@param [in] access_token_var= The global macro variable to contain the access
token, if using authorization_code grant type.
@param [in] grant_type= (sas_services) Valid values are:

@@ -21987,6 +22224,7 @@ run;
  ,inref=
  ,intype=BINARY
  ,contentdisp=inline
  ,ctype=0
  ,access_token_var=ACCESS_TOKEN
  ,grant_type=sas_services
  ,mdebug=0

@@ -22038,8 +22276,10 @@ filename &fref filesrvc
  folderPath="&path"
  filename="&name"
  cdisp="&contentdisp"
  %if "&ctype" ne "0" %then %do;
    ctype="&ctype"
  %end;
  lrecl=1048544;

%if &intype=BINARY %then %do;
  %mp_binarycopy(inref=&inref, outref=&fref)
%end;

@@ -18,11 +18,14 @@
%mp_deleteconstraints(inds=work.constraints,outds=dropped,execute=YES)
%mp_createconstraints(inds=work.constraints,outds=created,execute=YES)

@param inds= The input table containing the constraint info
@param outds= a table containing the create statements (create_statement column)
@param execute= `YES|NO` - default is NO. To actually create, use YES.
@param inds= (work.mp_getconstraints) The input table containing the
constraint info
@param outds= (work.mp_createconstraints) A table containing the create
statements (create_statement column)
@param execute= (NO) To actually create, use YES.

<h4> SAS Macros </h4>
<h4> Related Files </h4>
@li mp_getconstraints.sas

@version 9.2
@author Allan Bowe

@@ -30,7 +33,7 @@
**/

%macro mp_createconstraints(inds=mp_getconstraints
  ,outds=mp_createconstraints
  ,outds=work.mp_createconstraints
  ,execute=NO
)/*/STORE SOURCE*/;

@@ -64,4 +67,4 @@ data &outds;
  output;
run;

%mend mp_createconstraints;
%mend mp_createconstraints;

52 base/mp_dictionary.sas Normal file

@@ -0,0 +1,52 @@
/**
@file mp_dictionary.sas
@brief Creates a portal (libref) into the SQL Dictionary Views
@details Provide a libref and the macro will create a series of views against
each view in the special PROC SQL dictionary libref.

This is useful if you would like to visualise (navigate) the views in a SAS
client such as Base SAS, Enterprise Guide, or Studio (or [Data Controller](
https://datacontroller.io)).

It works by extracting the dictionary.dictionaries view into
YOURLIB.dictionaries, then uses that to create a YOURLIB.{viewName} for every
other dictionary.view, eg:

proc sql;
create view YOURLIB.columns as select * from dictionary.columns;

Usage:

libname demo "/lib/directory";
%mp_dictionary(lib=demo)

Or, to just create them in WORK:

%mp_dictionary()

If you'd just like to browse the dictionary data model, you can also check
out [this article](https://rawsas.com/dictionary-of-dictionaries/).

@param lib= (WORK) The libref in which to create the views

<h4> Related Files </h4>
@li mp_dictionary.test.sas

@version 9.2
@author Allan Bowe

**/

%macro mp_dictionary(lib=WORK)/*/STORE SOURCE*/;
  %local list i mem;
  proc sql noprint;
  create view &lib..dictionaries as select * from dictionary.dictionaries;
  select distinct memname into: list separated by ' ' from &lib..dictionaries;
  %do i=1 %to %sysfunc(countw(&list,%str( )));
    %let mem=%scan(&list,&i,%str( ));
    create view &lib..&mem as select * from dictionary.&mem;
  %end;
  quit;
%mend mp_dictionary;
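Once created, the views behave like ordinary tables in the target library. The following is a minimal usage sketch. It assumes the sasjs/core macros are loaded from the public `all.sas`, which needs internet access from the SAS session. The `demo` libref simply points at WORK, as in the accompanying test, and `work.class_cols` is a hypothetical output name:

```sas
/* load the sasjs/core macro library (requires internet access) */
filename mc url "https://raw.githubusercontent.com/sasjs/core/main/all.sas";
%inc mc;

/* build the dictionary views in a library that points at WORK */
libname demo (work);
%mp_dictionary(lib=demo)

/* demo.columns is a view of dictionary.columns, so this returns one row
   per variable in SASHELP.CLASS */
proc sql;
create table work.class_cols as
  select name, type, length
    from demo.columns
    where libname='SASHELP' and memname='CLASS';
quit;
```

Going through a libref rather than `dictionary.` is what allows point-and-click clients to browse the metadata, since they cannot see the special dictionary libref directly.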

@@ -27,6 +27,9 @@
@param [in] maxdepth= (0) Set to a positive integer to indicate the level of
subdirectory scan recursion - eg 3, to go `./3/levels/deep`. For unlimited
recursion, set to MAX.
@param [in] showparent= (NO) By default, the initial parent directory is not
part of the results. Set to YES to include it. For this record only,
directory=filepath.
@param [out] outds= (work.mp_dirlist) The output dataset to create
@param [out] getattrs= (NO) If getattrs=YES then the doptname / foptname
functions are used to scan all properties - any characters that are not

@@ -63,6 +66,7 @@
  , fref=0
  , outds=work.mp_dirlist
  , getattrs=NO
  , showparent=NO
  , maxdepth=0
  , level=0 /* The level of recursion to perform. For internal use only. */
)/*/STORE SOURCE*/;

@@ -145,6 +149,15 @@ data &out_ds(compress=no
    output;
  end;
  rc = dclose(did);
  %if &showparent=YES and &level=0 %then %do;
    filepath=directory;
    file_or_folder='folder';
    ext='';
    filename=scan(directory,-1,'/\');
    msg='';
    level=&level;
    output;
  %end;
  stop;
run;

@@ -232,6 +245,9 @@ run;
data _null_;
  set &out_ds;
  where file_or_folder='folder';
  %if &showparent=YES and &level=0 %then %do;
    if filepath ne directory;
  %end;
  length code $10000;
  code=cats('%nrstr(%mp_dirlist(path=',filepath,",outds=&outds"
    ,",getattrs=&getattrs,level=%eval(&level+1),maxdepth=&maxdepth))");
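With `showparent=YES`, the starting folder becomes a record of its own. It is the only row where `directory` equals `filepath`, so a simple filter can isolate it. The following is a minimal sketch. It assumes the sasjs/core macros are loaded from the public `all.sas` (internet access required). The WORK path is used only because it always exists, and `work.listing` and `work.parent_row` are hypothetical names:

```sas
/* load the sasjs/core macro library (requires internet access) */
filename mc url "https://raw.githubusercontent.com/sasjs/core/main/all.sas";
%inc mc;

/* list the WORK directory (top level only) and include the parent itself */
%let root=%sysfunc(pathname(work));
%mp_dirlist(path=&root, outds=work.listing, showparent=YES)

/* per the showparent= documentation, only the parent record has
   directory=filepath */
data work.parent_row;
  set work.listing;
  where filepath=directory;
run;
```

The same property is what the recursion step above relies on: `if filepath ne directory;` stops the parent row from being scanned a second time.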

@@ -92,7 +92,7 @@ data _null_;
run;

%if %upcase(&showlog)=YES %then %do;
  options ps=max;
  options ps=max lrecl=max;
  data _null_;
    infile &outref;
    input;

@@ -100,4 +100,4 @@ run;
  run;
%end;

%mend mp_ds2md;
%mend mp_ds2md;

@@ -11,7 +11,7 @@
put hashkey=;
run;

<h4> SAS Macros </h4>
@li mf_getattrn.sas

@@ -21,11 +21,12 @@

<h4> Related Files </h4>
@li mp_hashdataset.test.sas
@li mp_hashdirectory.sas

@param [in] libds dataset to hash
@param [in] salt= Provide a salt (could be, for instance, the dataset name)
@param [in] iftrue= A condition under which the macro should be executed.
@param [out] outds= (work.mf_hashdataset) The output dataset to create. This
@param [in] iftrue= (1=1) A condition under which the macro should be executed
@param [out] outds= (work._data_) The output dataset to create. This
will contain one column (hashkey) with one observation (a $hex32.
representation of the input hash)
|hashkey:$32.|

162 base/mp_hashdirectory.sas Normal file

@@ -0,0 +1,162 @@
/**
@file
@brief Returns a unique hash for each file in a directory
@details Hashes each file in each directory, and then hashes the hashes to
create a hash for each directory also.

This makes use of the new `hashing_file()` and `hashing` functions, available
since 9.4m6. Interestingly, these can even be used in pure macro, eg:

%put %sysfunc(hashing_file(md5,/path/to/file.blob,0));

Actual usage:

%let fpath=/some/directory;

%mp_hashdirectory(&fpath,outds=myhash,maxdepth=2)

data _null_;
set work.myhash;
put (_all_)(=);
run;

Whilst files are hashed in their entirety, the logic for creating a folder
hash is as follows:

@li Sort the files by filename (case sensitive, uppercase then lower)
@li Take the first 100 hashes, concatenate and hash
@li Concatenate this hash with another 100 hashes and hash again
@li Continue until the end of the folder. This is the folder hash
@li If a folder contains other folders, start from the bottom of the tree -
the folder hashes cascade upwards so you know immediately if there is a
change in a sub/sub directory
@li If the folder has no content (empty) then it is ignored. No hash created.
@li If the file is empty, it is also ignored / no hash created.

<h4> SAS Macros </h4>
@li mp_dirlist.sas

<h4> Related Files </h4>
@li mp_hashdataset.sas
@li mp_hashdirectory.test.sas
@li mp_md5.sas

@param [in] inloc Full filepath of the file to be hashed (unquoted)
@param [in] iftrue= (1=1) A condition under which the macro should be executed
@param [in] maxdepth= (0) Set to a positive integer to indicate the level of
subdirectory scan recursion - eg 3, to go `./3/levels/deep`. For unlimited
recursion, set to MAX.
@param [in] method= (MD5) the hashing method to use. Available options:
@li MD5
@li SHA1
@li SHA256
@li SHA384
@li SHA512
@li CRC32
@param [out] outds= (work.mp_hashdirectory) The output dataset. Contains:
@li directory - the parent folder
@li file_hash - the hash output
@li hash_duration - how long the hash took (first hash always takes longer)
@li file_path - /full/path/to/each/file.ext
@li file_or_folder - contains either "file" or "folder"
@li level - the depth of the directory (top level is 0)

@version 9.4m6
@author Allan Bowe
**/

%macro mp_hashdirectory(inloc,
  outds=work.mp_hashdirectory,
  method=MD5,
  maxdepth=0,
  iftrue=%str(1=1)
)/*/STORE SOURCE*/;

%local curlevel tempds ;

%if not(%eval(%unquote(&iftrue))) %then %return;

/* get the directory listing */
%mp_dirlist(path=&inloc, outds=&outds, maxdepth=&maxdepth, showparent=YES)

/* create the hashes */
data &outds;
  set &outds (rename=(filepath=file_path));
  length FILE_HASH $32 HASH_DURATION 8;
  keep directory file_hash hash_duration file_path file_or_folder level;

  ts=datetime();
  if file_or_folder='file' then do;
    /* if file is empty, hashing_file will break - so ignore / delete */
    length fname val $8;
    drop fname val fid is_empty;
    rc=filename(fname,file_path);
    fid=fopen(fname);
    if fid > 0 then do;
      rc=fread(fid);
      is_empty=fget(fid,val);
    end;
    rc=fclose(fid);
    rc=filename(fname);
    if is_empty ne 0 then delete;
    else file_hash=hashing_file("&method",cats(file_path),0);
  end;
  hash_duration=datetime()-ts;
run;

proc sort data=&outds ;
  by descending level directory file_path;
run;

data _null_;
  set &outds;
  call symputx('maxlevel',level,'l');
  stop;
run;

/* now hash the hashes to populate folder hashes, starting from the bottom */
%do curlevel=&maxlevel %to 0 %by -1;
  data work._data_ (keep=directory file_hash);
    set &outds;
    where level=&curlevel;
    by descending level directory file_path;
    length str $32767 tmp_hash $32;
    retain str tmp_hash ;
    /* reset vars when starting a new directory */
    if first.directory then do;
      str='';
      tmp_hash='';
      i=0;
    end;
    /* hash each chunk of 100 file paths */
    i+1;
    str=cats(str,file_hash);
    if mod(i,100)=0 or last.directory then do;
      tmp_hash=hashing("&method",cats(tmp_hash,str));
      str='';
    end;
    /* output the hash at directory level */
    if last.directory then do;
      file_hash=tmp_hash;
      output;
    end;
    if last.level then stop;
  run;
  %let tempds=&syslast;
  /* join the hash back into the main table */
  proc sql undo_policy=none;
  create table &outds as
    select a.directory
      ,coalesce(b.file_hash,a.file_hash) as file_hash
      ,a.hash_duration
      ,a.file_path
      ,a.file_or_folder
      ,a.level
    from &outds a
    left join &tempds b
    on a.file_path=b.directory
    order by level desc, directory, file_path;
  drop table &tempds;
%end;

%mend mp_hashdirectory;
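The folder-hash chaining described in the header can be reproduced in a few DATA step lines. This is a simplified sketch, not the macro's own code, and it makes three simplifications:

* It handles a single folder.
* It lowers the chunk size from 100 to 2, so the intermediate hashing steps actually occur with only five inputs.
* It hashes made-up path strings in place of real file contents.

The names `work.demo_files`, `work.demo_folder` and the `/demo` paths are hypothetical. SAS 9.4M6 or later is assumed for the `HASHING` function:

```sas
/* illustration only: chunk size lowered from 100 to 2 */
%let chunk=2;

/* five stand-in "file hashes" for one hypothetical folder */
data work.demo_files;
  length file_path $40 file_hash $32;
  do i=1 to 5;
    file_path=cats('/demo/file',i,'.txt');
    /* stand-in for hashing_file(): hash the path text, not file content */
    file_hash=hashing('MD5',strip(file_path));
    output;
  end;
  drop i;
run;

/* fold the file hashes into one folder hash */
data work.demo_folder(keep=folder_hash);
  set work.demo_files end=last;
  length str $32767 folder_hash $32;
  retain str folder_hash;
  n+1;
  str=cats(str,file_hash);
  /* after every CHUNK hashes (and at the end), hash the running folder
     hash together with the current chunk */
  if mod(n,&chunk)=0 or last then do;
    folder_hash=hashing('MD5',cats(folder_hash,str));
    str='';
  end;
  if last then output;
run;
```

Each step feeds the previous folder hash back into `hashing()`, so changing any single file hash changes the final value. The macro applies the same idea per directory and per level, which is how a change deep in the tree surfaces in the top-level hash.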

26 tests/base/mp_dictionary.test.sas Normal file

@@ -0,0 +1,26 @@
/**
@file
@brief Testing mp_dictionary.sas macro

<h4> SAS Macros </h4>
@li mp_dictionary.sas
@li mp_assert.sas

**/

libname test (work);
%mp_dictionary(lib=test)

proc sql;
create table work.compare1 as select * from test.styles;
create table work.compare2 as select * from dictionary.styles;

proc compare base=compare1 compare=compare2;
run;
%put _all_;

%mp_assert(
  iftrue=(%mf_existds(&sysinfo)=0),
  desc=Compare was exact,
  outds=work.test_results
)

133

tests/base/mp_hashdirectory.test.sas

Normal file

133

tests/base/mp_hashdirectory.test.sas

Normal file

@@ -0,0 +1,133 @@

/**
  @file
  @brief Testing mp_hashdirectory.sas macro

  <h4> SAS Macros </h4>
  @li mf_mkdir.sas
  @li mf_nobs.sas
  @li mp_assert.sas
  @li mp_assertscope.sas
  @li mp_hashdirectory.sas

**/

/* set up a directory to hash */
%let fpath=%sysfunc(pathname(work))/testdir;

%mf_mkdir(&fpath)
%mf_mkdir(&fpath/sub1)
%mf_mkdir(&fpath/sub2)
%mf_mkdir(&fpath/sub1/subsub)

/* note - each file contains its own path, and WORK differs per session,
  so the hashes differ from run to run */
%macro makefile(path,name);
  data _null_;
    file "&path/&name" termstr=lf;
    put "This file is located at:";
    put "&path";
    put "and it is called:";
    put "&name";
  run;
%mend makefile;

%macro spawner(path);
  %local x;
  %do x=1 %to 5;
    %makefile(&path,file&x..txt)
  %end;
%mend spawner;

%spawner(&fpath)
%spawner(&fpath/sub1)
%spawner(&fpath/sub1/subsub)

%mp_assertscope(SNAPSHOT)
%mp_hashdirectory(&fpath,outds=work.hashes,maxdepth=MAX)
%mp_assertscope(COMPARE)

%mp_assert(
  iftrue=(&syscc=0),
  desc=No errors,
  outds=work.test_results
)

%mp_assert(
  iftrue=(%mf_nobs(work.hashes)=19),
  desc=record created for each entry,
  outds=work.test_results
)

proc sql;
select count(*) into: misscheck
  from work.hashes
  where file_hash is missing;

%mp_assert(
  iftrue=(&misscheck=1),
  desc=Only one missing hash - the empty directory,
  outds=work.test_results
)

data _null_;
  set work.hashes;
  if directory=file_path then call symputx('tophash',file_hash);
run;

%mp_assert(
  iftrue=(%length(&tophash)=32),
  desc=ensure valid top level hash created,
  outds=work.test_results
)

/* now change a file and re-hash */
data _null_;
  file "&fpath/sub1/subsub/file1.txt" termstr=lf;
  put "This file has changed!";
run;

%mp_hashdirectory(&fpath,outds=work.hashes2,maxdepth=MAX)

data _null_;
  set work.hashes2;
  if directory=file_path then call symputx('tophash2',file_hash);
run;

%mp_assert(
  iftrue=(&tophash ne &tophash2),
  desc=ensure changing a file results in a new top level hash,
  outds=work.test_results
)
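When the top-level hash changes, it can help to see which entries changed with it. A minimal sketch that joins the first two hash tables on `file_path` and keeps rows whose hash differs; the output table `work.changed` is hypothetical. If directory hashes roll up from their contents, as the top-level assertion implies, this would be expected to list the edited `file1.txt` together with its parent folders:

```sas
/* list every path whose hash differs between the two runs */
proc sql;
create table work.changed as
  select a.file_path
    ,a.file_or_folder
    ,a.file_hash as old_hash
    ,b.file_hash as new_hash
  from work.hashes a
  inner join work.hashes2 b
    on a.file_path=b.file_path
  where a.file_hash ne b.file_hash
  order by a.file_path;
quit;

/* write the differences to the log */
data _null_;
  set work.changed;
  put file_or_folder= file_path= old_hash= new_hash=;
run;
```

Rows where both hashes are missing (the empty `sub2` directory) compare as equal and drop out of the result.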

/* now change it back and see if it matches */
data _null_;
  file "&fpath/sub1/subsub/file1.txt" termstr=lf;
  put "This file is located at:";
  put "&fpath/sub1/subsub";
  put "and it is called:";
  put "file1.txt";
run;

%mp_hashdirectory(&fpath,outds=work.hashes3,maxdepth=MAX)

data _null_;
  set work.hashes3;
  if directory=file_path then call symputx('tophash3',file_hash);
run;

%mp_assert(
  iftrue=(&tophash=&tophash3),
  desc=ensure the same files result in the same hash,
  outds=work.test_results
)

/* dump contents for debugging */
data _null_;
  set work.hashes;
  put file_hash file_path;
run;
data _null_;
  set work.hashes2;
  put file_hash file_path;
run;

@@ -24,7 +24,8 @@
  @param [in] contentdisp= (inline) Content Disposition. Example values:
    @li inline
    @li attachment
  @param [in] ctype= (0) Set a default HTTP Content-Type header to be returned
    with the file when the content is retrieved from the Files service.
  @param [in] access_token_var= The global macro variable to contain the access
    token, if using authorization_code grant type.
  @param [in] grant_type= (sas_services) Valid values are:

@@ -52,6 +53,7 @@
  ,inref=
  ,intype=BINARY
  ,contentdisp=inline
  ,ctype=0
  ,access_token_var=ACCESS_TOKEN
  ,grant_type=sas_services
  ,mdebug=0

@@ -103,8 +105,10 @@ filename &fref filesrvc
    folderPath="&path"
    filename="&name"
    cdisp="&contentdisp"
    %if "&ctype" ne "0" %then %do;
      ctype="&ctype"
    %end;
    lrecl=1048544;

  %if &intype=BINARY %then %do;
    %mp_binarycopy(inref=&inref, outref=&fref)
  %end;
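A hypothetical call exercising the new `ctype=` option. This excerpt does not show which file these hunks belong to: the macro name `mv_createfile` and its `path=` and `name=` parameters are assumptions inferred from the `folderPath="&path"` and `filename="&name"` lines and the `,inref=`/`,intype=` parameter list. Running it also requires a SAS Viya session with access to the Files service, and the folder `/Public/temp` is a placeholder:

```sas
/* write a small HTML page to a temporary fileref */
filename myhtml temp;
data _null_;
  file myhtml;
  put '<html><body><p>Hello from SAS</p></body></html>';
run;

/* ASSUMPTION: macro name and path=/name= parameters inferred from the diff */
%mv_createfile(path=/Public/temp,
  name=hello.html,
  inref=myhtml,
  intype=BINARY,
  contentdisp=inline,
  ctype=text/html
)
```

Leaving `ctype=` at its default of `0` omits the `ctype=` option from the `filename ... filesrvc` statement entirely, as the `%if "&ctype" ne "0"` guard in the hunk shows.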
