mirror of https://github.com/sasjs/core.git

synced 2025-12-24 03:31:19 +00:00

Compare commits

21 Commits

| Author | SHA1 | Date |
|---|---|---|
| | f832e93f4b | |
| | f37c2e5867 | |
| | 6f8ec5d5a8 | |
| | 6521ade608 | |
| | 2666bbc85e | |
| | ee35f47f4f | |
| | 7f867e2a5c | |
| | c6af6ce578 | |
| | a1aac785c0 | |
| | dbe8b0b1c3 | |
| | 2ee9a4cee4 | |
| | 3a7afdffb7 | |
| | c78211aa1c | |
| | 76c49e96f2 | |
| | 984ea44f5d | |
| | 88f1222abd | |
| | d88f028ee3 | |
| | 07d7c9df4b | |
| | 6765a1d025 | |
| | 952f28a872 | |
| | 8246b5a42c | |

30

.github/vpn/config.ovpn

vendored


@@ -1,30 +0,0 @@
cipher AES-256-CBC
setenv FORWARD_COMPATIBLE 1
client
server-poll-timeout 4
nobind
remote vpn.analytium.co.uk 1194 udp
remote vpn.analytium.co.uk 1194 udp
remote vpn.analytium.co.uk 443 tcp
remote vpn.analytium.co.uk 1194 udp
remote vpn.analytium.co.uk 1194 udp
remote vpn.analytium.co.uk 1194 udp
remote vpn.analytium.co.uk 1194 udp
remote vpn.analytium.co.uk 1194 udp
dev tun
dev-type tun
ns-cert-type server
setenv opt tls-version-min 1.0 or-highest
reneg-sec 604800
sndbuf 0
rcvbuf 0
# NOTE: LZO commands are pushed by the Access Server at connect time.
# NOTE: The below line doesn't disable LZO.
comp-lzo no
verb 3
setenv PUSH_PEER_INFO

ca ca.crt
cert user.crt
key user.key
tls-auth tls.key 1

7

.github/workflows/main.yml

vendored


@@ -19,3 +19,10 @@ jobs:
        env:
          GITHUB_TOKEN: ${{ secrets.GH_TOKEN }}
          NPM_TOKEN: ${{ secrets.NPM_TOKEN }}
      - name: SAS Packages Release
        run: |
          sasjs compile job -s sasjs/utils/create_sas_package.sas -o sasjsbuild/makepak.sas
          # this part depends on https://github.com/sasjs/server/issues/307
          # sasjs run sasjsbuild/makepak.sas -t sas9

25

.github/workflows/run-tests.yml

vendored


@@ -21,31 +21,6 @@ jobs:
        with:
          node-version: ${{ matrix.node-version }}

      - name: Write VPN Files
        run: |
          echo "$CA_CRT" > .github/vpn/ca.crt
          echo "$USER_CRT" > .github/vpn/user.crt
          echo "$USER_KEY" > .github/vpn/user.key
          echo "$TLS_KEY" > .github/vpn/tls.key
        shell: bash
        env:
          CA_CRT: ${{ secrets.CA_CRT}}
          USER_CRT: ${{ secrets.USER_CRT }}
          USER_KEY: ${{ secrets.USER_KEY }}
          TLS_KEY: ${{ secrets.TLS_KEY }}

      - name: Install Open VPN
        run: |
          sudo apt install apt-transport-https
          sudo wget https://swupdate.openvpn.net/repos/openvpn-repo-pkg-key.pub
          sudo apt-key add openvpn-repo-pkg-key.pub
          sudo wget -O /etc/apt/sources.list.d/openvpn3.list https://swupdate.openvpn.net/community/openvpn3/repos/openvpn3-focal.list
          sudo apt update
          sudo apt install openvpn3

      - name: Start Open VPN 3
        run: openvpn3 session-start --config .github/vpn/config.ovpn

      - name: Install Doxygen
        run: sudo apt-get install doxygen

@@ -237,6 +237,7 @@ If you find this library useful, please leave a [star](https://github.com/sasjs/

The following repositories are also worth checking out:

* [xieliaing/SAS](https://github.com/xieliaing/SAS)
* [SASJedi/sas-macros](https://github.com/SASJedi/sas-macros)
* [chris-swenson/sasmacros](https://github.com/chris-swenson/sasmacros)
* [greg-wootton/sas-programs](https://github.com/greg-wootton/sas-programs)

400

all.sas


@@ -4112,11 +4112,14 @@ proc sql;
%mp_deleteconstraints(inds=work.constraints,outds=dropped,execute=YES)
%mp_createconstraints(inds=work.constraints,outds=created,execute=YES)

@param inds= The input table containing the constraint info
@param outds= a table containing the create statements (create_statement column)
@param execute= `YES|NO` - default is NO. To actually create, use YES.
@param inds= (work.mp_getconstraints) The input table containing the
constraint info
@param outds= (work.mp_createconstraints) A table containing the create
statements (create_statement column)
@param execute= (NO) To actually create, use YES.

<h4> SAS Macros </h4>
<h4> Related Files </h4>
@li mp_getconstraints.sas

@version 9.2
@author Allan Bowe

@@ -4124,7 +4127,7 @@ proc sql;
**/

%macro mp_createconstraints(inds=mp_getconstraints
,outds=mp_createconstraints
,outds=work.mp_createconstraints
,execute=NO
)/*/STORE SOURCE*/;

@@ -4158,7 +4161,8 @@ data &outds;
output;
run;

%mend mp_createconstraints;/**
%mend mp_createconstraints;
/**
@file mp_createwebservice.sas
@brief Create a web service in SAS 9, Viya or SASjs Server
@details This is actually a wrapper for mx_createwebservice.sas, remaining

@@ -4450,6 +4454,58 @@ run;
%end;
%else %put &sysmacroname: &folder: is not a valid / accessible folder. ;
%mend mp_deletefolder;/**
@file mp_dictionary.sas
@brief Creates a portal (libref) into the SQL Dictionary Views
@details Provide a libref and the macro will create a series of views against
each view in the special PROC SQL dictionary libref.

This is useful if you would like to visualise (navigate) the views in a SAS
client such as Base SAS, Enterprise Guide, or Studio (or [Data Controller](
https://datacontroller.io)).

It works by extracting the dictionary.dictionaries view into
YOURLIB.dictionaries, then uses that to create a YOURLIB.{viewName} for every
other dictionary.view, eg:

proc sql;
create view YOURLIB.columns as select * from dictionary.columns;

Usage:

libname demo "/lib/directory";
%mp_dictionary(lib=demo)

Or, to just create them in WORK:

%mp_dictionary()

If you'd just like to browse the dictionary data model, you can also check
out [this article](https://rawsas.com/dictionary-of-dictionaries/).



@param lib= (WORK) The libref in which to create the views

<h4> Related Files </h4>
@li mp_dictionary.test.sas

@version 9.2
@author Allan Bowe

**/

%macro mp_dictionary(lib=WORK)/*/STORE SOURCE*/;
%local list i mem;
proc sql noprint;
create view &lib..dictionaries as select * from dictionary.dictionaries;
select distinct memname into: list separated by ' ' from &lib..dictionaries;
%do i=1 %to %sysfunc(countw(&list,%str( )));
%let mem=%scan(&list,&i,%str( ));
create view &lib..&mem as select * from dictionary.&mem;
%end;
quit;
%mend mp_dictionary;
/**
@file
@brief Returns all files and subdirectories within a specified parent
@details When used with getattrs=NO, is not OS specific (uses dopen / dread).

@@ -4478,6 +4534,9 @@ run;
@param [in] maxdepth= (0) Set to a positive integer to indicate the level of
subdirectory scan recursion - eg 3, to go `./3/levels/deep`. For unlimited
recursion, set to MAX.
@param [in] showparent= (NO) By default, the initial parent directory is not
part of the results. Set to YES to include it. For this record only,
directory=filepath.
@param [out] outds= (work.mp_dirlist) The output dataset to create
@param [out] getattrs= (NO) If getattrs=YES then the doptname / foptname
functions are used to scan all properties - any characters that are not

@@ -4514,6 +4573,7 @@ run;
, fref=0
, outds=work.mp_dirlist
, getattrs=NO
, showparent=NO
, maxdepth=0
, level=0 /* The level of recursion to perform. For internal use only. */
)/*/STORE SOURCE*/;

@@ -4596,6 +4656,15 @@ data &out_ds(compress=no
output;
end;
rc = dclose(did);
%if &showparent=YES and &level=0 %then %do;
filepath=directory;
file_or_folder='folder';
ext='';
filename=scan(directory,-1,'/\');
msg='';
level=&level;
output;
%end;
stop;
run;

@@ -4683,6 +4752,9 @@ run;
data _null_;
set &out_ds;
where file_or_folder='folder';
%if &showparent=YES and &level=0 %then %do;
if filepath ne directory;
%end;
length code $10000;
code=cats('%nrstr(%mp_dirlist(path=',filepath,",outds=&outds"
,",getattrs=&getattrs,level=%eval(&level+1),maxdepth=&maxdepth))");

@@ -5698,7 +5770,7 @@ data _null_;
run;

%if %upcase(&showlog)=YES %then %do;
options ps=max;
options ps=max lrecl=max;
data _null_;
infile &outref;
input;

@@ -5706,7 +5778,8 @@ run;
run;
%end;

%mend mp_ds2md;/**
%mend mp_ds2md;
/**
@file
@brief Create a smaller version of a dataset, without data loss
@details This macro will scan the input dataset and create a new one, that

@@ -8095,6 +8168,80 @@ create table &outds as
)

%mend mp_getpk;
/**
@file
@brief Pulls latest release info from a GIT repository
@details Useful for grabbing the latest version number or other attributes
from a GIT server. Supported providers are GitLab and GitHub. Pull requests
are welcome if you'd like to see additional providers!

Note that each provider provides slightly different JSON output. Therefore
the macro simply extracts the JSON and assigns the libname (using the JSON
engine).

Example usage (eg, to grab latest release version from github):

%mp_gitreleaseinfo(GITHUB,sasjs/core,outlib=mylibref)

data _null_;
set mylibref.root;
putlog TAG_NAME=;
run;

@param [in] provider The GIT provider for the release info. Accepted values:
@li GITLAB
@li GITHUB - Tables include root, assets, author, alldata
@param [in] project The link to the repository. This has different formats
depending on the vendor:
@li GITHUB - org/repo, eg sasjs/core
@li GITLAB - project, eg 1343223
@param [in] server= (0) If your repo is self-hosted, then provide the domain
here. Otherwise it will default to the provider domain (eg gitlab.com).
@param [in] mdebug= (0) Set to 1 to enable DEBUG messages
@param [out] outlib= (GITREL) The JSON-engine libref to be created, which will
point at the returned JSON

<h4> SAS Macros </h4>
@li mf_getuniquefileref.sas

<h4> Related Files </h4>
@li mp_gitreleaseinfo.test.sas

**/

%macro mp_gitreleaseinfo(provider,project,server=0,outlib=GITREL,mdebug=0);
%local url fref;

%let provider=%upcase(&provider);

%if &provider=GITHUB %then %do;
%if "&server"="0" %then %let server=https://api.github.com;
%let url=&server/repos/&project/releases/latest;
%end;
%else %if &provider=GITLAB %then %do;
%if "&server"="0" %then %let server=https://gitlab.com;
%let url=&server/api/v4/projects/&project/releases;
%end;

%let fref=%mf_getuniquefileref();

proc http method='GET' out=&fref url="&url";
%if &mdebug=1 %then %do;
debug level = 3;
%end;
run;

libname &outlib JSON fileref=&fref;

%if &mdebug=1 %then %do;
data _null_;
infile &fref;
input;
putlog _infile_;
run;
%end;

%mend mp_gitreleaseinfo;
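The URL selection in `mp_gitreleaseinfo` can be sketched outside SAS as follows. This is an illustrative Python rendering and not part of the repository: the function name `release_url` is hypothetical, and only the two endpoints that appear in the macro are used. Raising an error for an unknown provider is a choice made for this sketch; the macro itself has no such branch.

```python
def release_url(provider: str, project: str, server: str = "0") -> str:
    """Build the release-info URL the way the macro's %if branches do."""
    provider = provider.upper()
    if provider == "GITHUB":
        # server=0 means "use the provider's public API host"
        base = "https://api.github.com" if server == "0" else server
        return f"{base}/repos/{project}/releases/latest"
    if provider == "GITLAB":
        base = "https://gitlab.com" if server == "0" else server
        return f"{base}/api/v4/projects/{project}/releases"
    # Assumption for this sketch only: fail loudly on anything else.
    raise ValueError(f"unsupported provider: {provider}")
```

Note that the GitHub path targets the single latest release, whereas the GitLab path targets the project's releases collection, which is one reason the resulting JSON tables differ between providers.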

/**
@file
@brief Performs a text substitution on a file

@@ -8497,7 +8644,7 @@ run;

|

||||

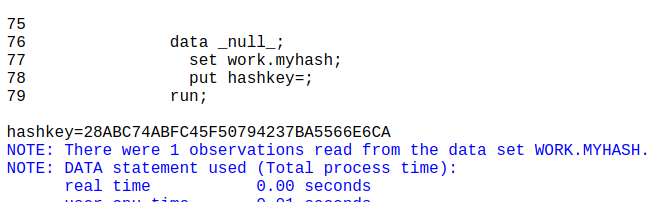

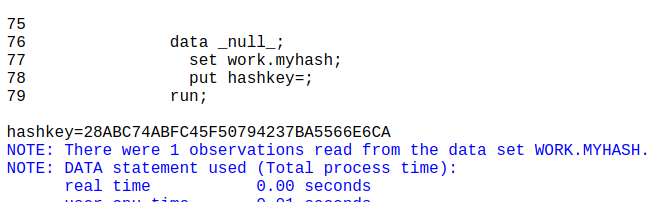

put hashkey=;

|

||||

run;

|

||||

|

||||

|

||||

|

||||

|

||||

<h4> SAS Macros </h4>

|

||||

@li mf_getattrn.sas

|

||||

@@ -8507,11 +8654,12 @@ run;

<h4> Related Files </h4>
@li mp_hashdataset.test.sas
@li mp_hashdirectory.sas

@param [in] libds dataset to hash
@param [in] salt= Provide a salt (could be, for instance, the dataset name)
@param [in] iftrue= A condition under which the macro should be executed.
@param [out] outds= (work.mf_hashdataset) The output dataset to create. This
@param [in] iftrue= (1=1) A condition under which the macro should be executed
@param [out] outds= (work._data_) The output dataset to create. This
will contain one column (hashkey) with one observation (a $hex32.
representation of the input hash)
|hashkey:$32.|

@@ -8574,6 +8722,168 @@ run;
run;
%end;
%mend mp_hashdataset;
/**
@file
@brief Returns a unique hash for each file in a directory
@details Hashes each file in each directory, and then hashes the hashes to
create a hash for each directory also.

This makes use of the new `hashing_file()` and `hashing` functions, available
since 9.4m6. Interestingly, these can even be used in pure macro, eg:

%put %sysfunc(hashing_file(md5,/path/to/file.blob,0));

Actual usage:

%let fpath=/some/directory;

%mp_hashdirectory(&fpath,outds=myhash,maxdepth=2)

data _null_;
set work.myhash;
put (_all_)(=);
run;

Whilst files are hashed in their entirety, the logic for creating a folder
hash is as follows:

@li Sort the files by filename (case sensitive, uppercase then lower)
@li Take the first 100 hashes, concatenate and hash
@li Concatenate this hash with another 100 hashes and hash again
@li Continue until the end of the folder. This is the folder hash
@li If a folder contains other folders, start from the bottom of the tree -
the folder hashes cascade upwards so you know immediately if there is a
change in a sub/sub directory
@li If the folder has no content (empty) then it is ignored. No hash created.
@li If the file is empty, it is also ignored / no hash created.

<h4> SAS Macros </h4>
@li mp_dirlist.sas

<h4> Related Files </h4>
@li mp_hashdataset.sas
@li mp_hashdirectory.test.sas
@li mp_md5.sas

@param [in] inloc Full filepath of the file to be hashed (unquoted)
@param [in] iftrue= (1=1) A condition under which the macro should be executed
@param [in] maxdepth= (0) Set to a positive integer to indicate the level of
subdirectory scan recursion - eg 3, to go `./3/levels/deep`. For unlimited
recursion, set to MAX.
@param [in] method= (MD5) the hashing method to use. Available options:
@li MD5
@li SHA1
@li SHA256
@li SHA384
@li SHA512
@li CRC32
@param [out] outds= (work.mp_hashdirectory) The output dataset. Contains:
@li directory - the parent folder
@li file_hash - the hash output
@li hash_duration - how long the hash took (first hash always takes longer)
@li file_path - /full/path/to/each/file.ext
@li file_or_folder - contains either "file" or "folder"
@li level - the depth of the directory (top level is 0)

@version 9.4m6
@author Allan Bowe
**/

%macro mp_hashdirectory(inloc,
outds=work.mp_hashdirectory,
method=MD5,
maxdepth=0,
iftrue=%str(1=1)
)/*/STORE SOURCE*/;

%local curlevel tempds ;

%if not(%eval(%unquote(&iftrue))) %then %return;

/* get the directory listing */
%mp_dirlist(path=&inloc, outds=&outds, maxdepth=&maxdepth, showparent=YES)

/* create the hashes */
data &outds;
set &outds (rename=(filepath=file_path));
length FILE_HASH $32 HASH_DURATION 8;
keep directory file_hash hash_duration file_path file_or_folder level;

ts=datetime();
if file_or_folder='file' then do;
/* if file is empty, hashing_file will break - so ignore / delete */
length fname val $8;
drop fname val fid is_empty;
rc=filename(fname,file_path);
fid=fopen(fname);
if fid > 0 then do;
rc=fread(fid);
is_empty=fget(fid,val);
end;
rc=fclose(fid);
rc=filename(fname);
if is_empty ne 0 then delete;
else file_hash=hashing_file("&method",cats(file_path),0);
end;
hash_duration=datetime()-ts;
run;

proc sort data=&outds ;
by descending level directory file_path;
run;

data _null_;
set &outds;
call symputx('maxlevel',level,'l');
stop;
run;

/* now hash the hashes to populate folder hashes, starting from the bottom */
%do curlevel=&maxlevel %to 0 %by -1;
data work._data_ (keep=directory file_hash);
set &outds;
where level=&curlevel;
by descending level directory file_path;
length str $32767 tmp_hash $32;
retain str tmp_hash ;
/* reset vars when starting a new directory */
if first.directory then do;
str='';
tmp_hash='';
i=0;
end;
/* hash each chunk of 100 file paths */
i+1;
str=cats(str,file_hash);
if mod(i,100)=0 or last.directory then do;
tmp_hash=hashing("&method",cats(tmp_hash,str));
str='';
end;
/* output the hash at directory level */
if last.directory then do;
file_hash=tmp_hash;
output;
end;
if last.level then stop;
run;
%let tempds=&syslast;
/* join the hash back into the main table */
proc sql undo_policy=none;
create table &outds as
select a.directory
,coalesce(b.file_hash,a.file_hash) as file_hash
,a.hash_duration
,a.file_path
,a.file_or_folder
,a.level
from &outds a
left join &tempds b
on a.file_path=b.directory
order by level desc, directory, file_path;
drop table &tempds;
%end;

%mend mp_hashdirectory;
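The chunked "hash of hashes" rule used for folders in `mp_hashdirectory` can be illustrated with a short Python sketch. It is not part of the repository: the name `folder_hash` is hypothetical, the input is assumed to be the already-sorted list of hex hashes for one directory, and returning upper-case hex is an assumption about how the SAS `hashing()` output is formatted.

```python
import hashlib


def folder_hash(file_hashes, method="md5", chunk=100):
    """Roll an ordered list of hex hashes up into a single folder hash."""
    rolling = ""
    for start in range(0, len(file_hashes), chunk):
        block = "".join(file_hashes[start:start + chunk])
        # Hash the previous rolling hash together with the next block,
        # mirroring hashing("&method", cats(tmp_hash, str)) in the macro.
        digest = hashlib.new(method, (rolling + block).encode("ascii"))
        rolling = digest.hexdigest().upper()
    # An empty list yields "", in line with empty folders getting no hash.
    return rolling
```

Because each block is hashed together with the previous result, a change to any single file hash alters the final folder hash, and the concatenated input never grows beyond one hash plus `chunk` hashes.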

/**
@file
@brief Performs a wrapped \%include

@@ -21934,6 +22244,66 @@ run;
%end;

%mend mfv_existfolder;/**
@file mfv_existsashdat.sas
@brief Checks whether a CAS sashdat dataset exists in persistent storage.
@details Can be used in open code, eg as follows:

%if %mfv_existsashdat(libds=casuser.sometable) %then %put yes it does!;

The function uses `dosubl()` to run the `table.fileinfo` action, for the
specified library, filtering for `*.sashdat` tables. The results are stored
in a WORK table (&outprefix._&lib). If that table already exists, it is
queried instead, to avoid the dosubl() performance hit.

To force a rescan, just use a new `&outprefix` value, or delete the table(s)
before running the function.

@param libds library.dataset
@param outprefix= (work.mfv_existsashdat) Used to store the current HDATA
tables to improve subsequent query performance. This reference is a prefix
and is converted to `&prefix._{libref}`

@return output returns 1 or 0

@version 0.2
@author Mathieu Blauw
**/

%macro mfv_existsashdat(libds,outprefix=work.mfv_existsashdat
);
%local rc dsid name lib ds;
%let lib=%upcase(%scan(&libds,1,'.'));
%let ds=%upcase(%scan(&libds,-1,'.'));

/* if table does not exist, create it */
%if %sysfunc(exist(&outprefix._&lib)) ne 1 %then %do;
%let rc=%sysfunc(dosubl(%nrstr(
/* Read in table list (once per &lib per session) */
proc cas;
table.fileinfo result=source_list /caslib="&lib";
val=findtable(source_list);
saveresult val dataout=&outprefix._&lib;
quit;
/* Only keep name, without file extension */
data &outprefix._&lib;
set &outprefix._&lib(where=(Name like '%.sashdat') keep=Name);
Name=upcase(scan(Name,1,'.'));
run;
)));
%end;

/* Scan table for hdat existence */
%let dsid=%sysfunc(open(&outprefix._&lib(where=(name="&ds"))));
%syscall set(dsid);
%let rc = %sysfunc(fetch(&dsid));
%let rc = %sysfunc(close(&dsid));

/* Return result */
%if "%trim(&name)"="%trim(&ds)" %then 1;
%else 0;

%mend mfv_existsashdat;
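The scan-once-then-reuse pattern described for `mfv_existsashdat` can be sketched in Python as below. This is an illustration only: `exists_sashdat` and the `list_files` callable are hypothetical stand-ins (the callable plays the role of the `table.fileinfo` action, the `cache` dict plays the role of the WORK table), and no CAS API is being modelled.

```python
def exists_sashdat(libds, list_files, cache):
    """Return 1 if <ds>.sashdat is listed for the library, else 0."""
    parts = libds.upper().split(".")
    lib, ds = parts[0], parts[-1]
    if lib not in cache:
        # Expensive scan happens once per library; keep upper-cased
        # names with the file extension removed.
        cache[lib] = {
            name.split(".")[0].upper()
            for name in list_files(lib)
            if name.endswith(".sashdat")
        }
    return 1 if ds in cache[lib] else 0
```

Passing a fresh `cache` (or deleting the entry for a library) corresponds to forcing a rescan with a new `&outprefix` value.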

/**
@file
@brief Creates a file in SAS Drive
@details Creates a file in SAS Drive and adds the appropriate content type.

@@ -21959,7 +22329,8 @@ run;
@param [in] contentdisp= (inline) Content Disposition. Example values:
@li inline
@li attachment

@param [in] ctype= (0) Set a default HTTP Content-Type header to be returned
with the file when the content is retrieved from the Files service.
@param [in] access_token_var= The global macro variable to contain the access
token, if using authorization_code grant type.
@param [in] grant_type= (sas_services) Valid values are:

@@ -21987,6 +22358,7 @@ run;
,inref=
,intype=BINARY
,contentdisp=inline
,ctype=0
,access_token_var=ACCESS_TOKEN
,grant_type=sas_services
,mdebug=0

@@ -22038,8 +22410,10 @@ filename &fref filesrvc
folderPath="&path"
filename="&name"
cdisp="&contentdisp"
%if "&ctype" ne "0" %then %do;
ctype="&ctype"
%end;
lrecl=1048544;

%if &intype=BINARY %then %do;
%mp_binarycopy(inref=&inref, outref=&fref)
%end;

@@ -18,11 +18,14 @@
%mp_deleteconstraints(inds=work.constraints,outds=dropped,execute=YES)
%mp_createconstraints(inds=work.constraints,outds=created,execute=YES)

@param inds= The input table containing the constraint info
@param outds= a table containing the create statements (create_statement column)
@param execute= `YES|NO` - default is NO. To actually create, use YES.
@param inds= (work.mp_getconstraints) The input table containing the
constraint info
@param outds= (work.mp_createconstraints) A table containing the create
statements (create_statement column)
@param execute= (NO) To actually create, use YES.

<h4> SAS Macros </h4>
<h4> Related Files </h4>
@li mp_getconstraints.sas

@version 9.2
@author Allan Bowe

@@ -30,7 +33,7 @@
**/

%macro mp_createconstraints(inds=mp_getconstraints
,outds=mp_createconstraints
,outds=work.mp_createconstraints
,execute=NO
)/*/STORE SOURCE*/;

@@ -64,4 +67,4 @@ data &outds;
output;
run;

%mend mp_createconstraints;
%mend mp_createconstraints;

52

base/mp_dictionary.sas

Normal file


@@ -0,0 +1,52 @@
/**
@file mp_dictionary.sas
@brief Creates a portal (libref) into the SQL Dictionary Views
@details Provide a libref and the macro will create a series of views against
each view in the special PROC SQL dictionary libref.

This is useful if you would like to visualise (navigate) the views in a SAS
client such as Base SAS, Enterprise Guide, or Studio (or [Data Controller](
https://datacontroller.io)).

It works by extracting the dictionary.dictionaries view into
YOURLIB.dictionaries, then uses that to create a YOURLIB.{viewName} for every
other dictionary.view, eg:

proc sql;
create view YOURLIB.columns as select * from dictionary.columns;

Usage:

libname demo "/lib/directory";
%mp_dictionary(lib=demo)

Or, to just create them in WORK:

%mp_dictionary()

If you'd just like to browse the dictionary data model, you can also check
out [this article](https://rawsas.com/dictionary-of-dictionaries/).



@param lib= (WORK) The libref in which to create the views

<h4> Related Files </h4>
@li mp_dictionary.test.sas

@version 9.2
@author Allan Bowe

**/

%macro mp_dictionary(lib=WORK)/*/STORE SOURCE*/;
%local list i mem;
proc sql noprint;
create view &lib..dictionaries as select * from dictionary.dictionaries;
select distinct memname into: list separated by ' ' from &lib..dictionaries;
%do i=1 %to %sysfunc(countw(&list,%str( )));
%let mem=%scan(&list,&i,%str( ));
create view &lib..&mem as select * from dictionary.&mem;
%end;
quit;
%mend mp_dictionary;
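To make the loop in `mp_dictionary` concrete, the statements it generates can be previewed with a small Python sketch. This is illustrative only and not part of the repository: `dictionary_view_sql` is a hypothetical helper, the member names are supplied by the caller rather than read from `dictionary.dictionaries`, and sorting is used here simply to make the output deterministic.

```python
def dictionary_view_sql(lib, memnames):
    """Return the CREATE VIEW statements for a libref and a list of members."""
    statements = [
        f"create view {lib}.dictionaries as select * from dictionary.dictionaries;"
    ]
    # One view per distinct dictionary member, like the %do loop over &list.
    for mem in sorted(set(memnames)):
        statements.append(
            f"create view {lib}.{mem} as select * from dictionary.{mem};"
        )
    return statements
```

For example, `dictionary_view_sql("demo", ["COLUMNS", "TABLES"])` yields the `dictionaries` view followed by one statement each for `COLUMNS` and `TABLES`.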

@@ -27,6 +27,9 @@
@param [in] maxdepth= (0) Set to a positive integer to indicate the level of
subdirectory scan recursion - eg 3, to go `./3/levels/deep`. For unlimited
recursion, set to MAX.
@param [in] showparent= (NO) By default, the initial parent directory is not
part of the results. Set to YES to include it. For this record only,
directory=filepath.
@param [out] outds= (work.mp_dirlist) The output dataset to create
@param [out] getattrs= (NO) If getattrs=YES then the doptname / foptname
functions are used to scan all properties - any characters that are not

@@ -63,6 +66,7 @@
, fref=0
, outds=work.mp_dirlist
, getattrs=NO
, showparent=NO
, maxdepth=0
, level=0 /* The level of recursion to perform. For internal use only. */
)/*/STORE SOURCE*/;

@@ -145,6 +149,15 @@ data &out_ds(compress=no
output;
end;
rc = dclose(did);
%if &showparent=YES and &level=0 %then %do;
filepath=directory;
file_or_folder='folder';
ext='';
filename=scan(directory,-1,'/\');
msg='';
level=&level;
output;
%end;
stop;
run;

@@ -232,6 +245,9 @@ run;
data _null_;
set &out_ds;
where file_or_folder='folder';
%if &showparent=YES and &level=0 %then %do;
if filepath ne directory;
%end;
length code $10000;
code=cats('%nrstr(%mp_dirlist(path=',filepath,",outds=&outds"
,",getattrs=&getattrs,level=%eval(&level+1),maxdepth=&maxdepth))");
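The effect of `showparent=YES` in `mp_dirlist` (one extra record for the starting directory, where directory equals filepath) can be illustrated with a single-level Python sketch. It is not part of the repository: `dirlist` is a hypothetical function, only a subset of the output columns is modelled, and recursion is left out.

```python
import os


def dirlist(path, showparent=False):
    """List the direct children of path; optionally add the parent itself."""
    rows = []
    for entry in sorted(os.scandir(path), key=lambda e: e.name):
        rows.append({
            "directory": path,
            "filepath": os.path.join(path, entry.name),
            "file_or_folder": "folder" if entry.is_dir() else "file",
            "level": 0,
        })
    if showparent:
        # For this record only, directory == filepath.
        rows.append({
            "directory": path,
            "filepath": path,
            "file_or_folder": "folder",
            "level": 0,
        })
    return rows
```

The parent record is also a folder, which is why the recursion step in the macro skips rows where `filepath` equals `directory`; otherwise the starting directory would be scanned again.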

@@ -92,7 +92,7 @@ data _null_;
run;

%if %upcase(&showlog)=YES %then %do;
options ps=max;
options ps=max lrecl=max;
data _null_;
infile &outref;
input;

@@ -100,4 +100,4 @@ run;
run;
%end;

%mend mp_ds2md;
%mend mp_ds2md;

46

base/mp_gitadd.sas

Normal file


@@ -0,0 +1,46 @@
/**
@file
@brief Stages files in a GIT repo
@details Uses the output dataset from mp_gitstatus.sas to determine the files
that should be staged.

If STAGED != `"TRUE"` then the file is staged (so you could provide an empty
char column if staging all observations).

Usage:

%let dir=%sysfunc(pathname(work))/core;
%let repo=https://github.com/sasjs/core;
%put source clone rc=%sysfunc(GITFN_CLONE(&repo,&dir));
%mf_writefile(&dir/somefile.txt,l1=some content)
%mf_deletefile(&dir/package.json)
%mp_gitstatus(&dir,outds=work.gitstatus)

%mp_gitadd(&dir,inds=work.gitstatus)

@param [in] gitdir The directory containing the GIT repository
@param [in] inds= (work.mp_gitadd) The input dataset with the list of files
to stage. Will accept the output from mp_gitstatus(), else just use a table
with the following columns:
@li path $1024 - relative path to the file in the repo
@li staged $32 - whether the file is staged (TRUE or FALSE)
@li status $64 - either new, deleted, or modified

@param [in] mdebug= (0) Set to 1 to enable DEBUG messages

<h4> Related Files </h4>
@li mp_gitadd.test.sas
@li mp_gitstatus.sas

**/

%macro mp_gitadd(gitdir,inds=work.mp_gitadd,mdebug=0);

data _null_;
set &inds;
if STAGED ne "TRUE";
rc=git_index_add("&gitdir",cats(path),status);
if rc ne 0 or &mdebug=1 then put rc=;
run;

%mend mp_gitadd;
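The row selection in `mp_gitadd` can be sketched in Python as below. This is an illustration of the filter only: `files_to_stage` is a hypothetical helper, rows are modelled as plain dicts with the `path`, `staged` and `status` columns from the header, and no git call is made.

```python
def files_to_stage(rows):
    """Pick (path, status) pairs for rows whose STAGED flag is not "TRUE"."""
    return [
        (row["path"].strip(), row["status"])
        for row in rows
        # A missing or empty flag counts as "not staged", so it is selected.
        if row.get("staged", "") != "TRUE"
    ]
```

This reflects the note in the header that an empty `staged` column results in every observation being staged.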


74

base/mp_gitreleaseinfo.sas

Normal file


@@ -0,0 +1,74 @@
/**
  @file
  @brief Pulls latest release info from a GIT repository
  @details Useful for grabbing the latest version number or other attributes
  from a GIT server. Supported providers are GitLab and GitHub. Pull requests
  are welcome if you'd like to see additional providers!

  Note that each provider provides slightly different JSON output. Therefore
  the macro simply extracts the JSON and assigns the libname (using the JSON
  engine).

  Example usage (eg, to grab latest release version from github):

      %mp_gitreleaseinfo(GITHUB,sasjs/core,outlib=mylibref)

      data _null_;
        set mylibref.root;
        putlog TAG_NAME=;
      run;

  @param [in] provider The GIT provider for the release info. Accepted values:
    @li GITLAB
    @li GITHUB - Tables include root, assets, author, alldata
  @param [in] project The link to the repository. This has different formats
    depending on the vendor:
    @li GITHUB - org/repo, eg sasjs/core
    @li GITLAB - project, eg 1343223
  @param [in] server= (0) If your repo is self-hosted, then provide the domain
    here. Otherwise it will default to the provider domain (eg gitlab.com).
  @param [in] mdebug= (0) Set to 1 to enable DEBUG messages
  @param [out] outlib= (GITREL) The JSON-engine libref to be created, which will
    point at the returned JSON

  <h4> SAS Macros </h4>
  @li mf_getuniquefileref.sas

  <h4> Related Files </h4>
  @li mp_gitreleaseinfo.test.sas

**/

%macro mp_gitreleaseinfo(provider,project,server=0,outlib=GITREL,mdebug=0);
%local url fref;

%let provider=%upcase(&provider);

%if &provider=GITHUB %then %do;
  %if "&server"="0" %then %let server=https://api.github.com;
  %let url=&server/repos/&project/releases/latest;
%end;
%else %if &provider=GITLAB %then %do;
  %if "&server"="0" %then %let server=https://gitlab.com;
  %let url=&server/api/v4/projects/&project/releases;
%end;

%let fref=%mf_getuniquefileref();

proc http method='GET' out=&fref url="&url";
%if &mdebug=1 %then %do;
  debug level = 3;
%end;
run;

libname &outlib JSON fileref=&fref;

%if &mdebug=1 %then %do;
  data _null_;
    infile &fref;
    input;
    putlog _infile_;
  run;
%end;

%mend mp_gitreleaseinfo;

67

base/mp_gitstatus.sas

Normal file

@@ -0,0 +1,67 @@
/**
  @file
  @brief Creates a dataset with the output from `GIT_STATUS()`
  @details Uses `git_status()` to fetch the number of changed files, then
  iterates through with `git_status_get()` for each change, writing one row
  per change to the output dataset.

  Usage:

      %let dir=%sysfunc(pathname(work))/core;
      %let repo=https://github.com/sasjs/core;
      %put source clone rc=%sysfunc(GITFN_CLONE(&repo,&dir));
      %mf_writefile(&dir/somefile.txt,l1=some content)
      %mf_deletefile(&dir/package.json)

      %mp_gitstatus(&dir,outds=work.gitstatus)

  More info on these functions is in this [helpful paper](
https://www.sas.com/content/dam/SAS/support/en/sas-global-forum-proceedings/2019/3057-2019.pdf
  ) by Danny Zimmerman.

  @param [in] gitdir The directory containing the GIT repository
  @param [out] outds= (work.mp_gitstatus) The output dataset to create. Vars:
    @li gitdir $1024 - directory of repo
    @li path $1024 - relative path to the file in the repo
    @li staged $32 - whether the file is staged (TRUE or FALSE)
    @li status $64 - either new, deleted, or modified
    @li cnt - number of files
    @li n - the "nth" file in the list from git_status()

  @param [in] mdebug= (0) Set to 1 to enable DEBUG messages

  <h4> Related Files </h4>
  @li mp_gitstatus.test.sas
  @li mp_gitadd.sas

**/

%macro mp_gitstatus(gitdir,outds=work.mp_gitstatus,mdebug=0);

data &outds;
  LENGTH gitdir path $ 1024 STATUS $ 64 STAGED $ 32;
  call missing (of _all_);
  gitdir=symget('gitdir');
  cnt=git_status(trim(gitdir));
  if cnt=-1 then do;
    put "The libgit2 library is unavailable and no Git operations can be used.";
    put "See: https://stackoverflow.com/questions/74082874";
  end;
  else if cnt=-2 then do;
    put "The libgit2 library is available, but the status function failed.";
    put "See the log for details.";
  end;
  else do n=1 to cnt;
    rc=GIT_STATUS_GET(n,gitdir,'PATH',path);
    rc=GIT_STATUS_GET(n,gitdir,'STAGED',staged);
    rc=GIT_STATUS_GET(n,gitdir,'STATUS',status);
    output;
  %if &mdebug=1 %then %do;
    putlog (_all_)(=);
  %end;
  end;
  rc=git_status_free(gitdir);
  drop rc cnt;
run;

%mend mp_gitstatus;

@@ -11,7 +11,7 @@
       put hashkey=;
     run;




   <h4> SAS Macros </h4>
   @li mf_getattrn.sas
@@ -21,11 +21,12 @@

   <h4> Related Files </h4>
   @li mp_hashdataset.test.sas
+  @li mp_hashdirectory.sas

   @param [in] libds dataset to hash
   @param [in] salt= Provide a salt (could be, for instance, the dataset name)
-  @param [in] iftrue= A condition under which the macro should be executed.
-  @param [out] outds= (work.mf_hashdataset) The output dataset to create. This
+  @param [in] iftrue= (1=1) A condition under which the macro should be executed
+  @param [out] outds= (work._data_) The output dataset to create. This
   will contain one column (hashkey) with one observation (a $hex32.
   representation of the input hash)
   |hashkey:$32.|

162

base/mp_hashdirectory.sas

Normal file

@@ -0,0 +1,162 @@
/**
  @file
  @brief Returns a unique hash for each file in a directory
  @details Hashes each file in each directory, and then hashes the hashes to
  create a hash for each directory also.

  This makes use of the new `hashing_file()` and `hashing()` functions, available
  since 9.4m6. Interestingly, these can even be used in pure macro, eg:

      %put %sysfunc(hashing_file(md5,/path/to/file.blob,0));

  Actual usage:

      %let fpath=/some/directory;

      %mp_hashdirectory(&fpath,outds=myhash,maxdepth=2)

      data _null_;
        set work.myhash;
        put (_all_)(=);
      run;

  Whilst files are hashed in their entirety, the logic for creating a folder
  hash is as follows:

  @li Sort the files by filename (case sensitive, uppercase then lower)
  @li Take the first 100 hashes, concatenate and hash
  @li Concatenate this hash with another 100 hashes and hash again
  @li Continue until the end of the folder. This is the folder hash
  @li If a folder contains other folders, start from the bottom of the tree -
    the folder hashes cascade upwards so you know immediately if there is a
    change in a sub/sub directory
  @li If the folder has no content (empty) then it is ignored. No hash created.
  @li If the file is empty, it is also ignored / no hash created.

  <h4> SAS Macros </h4>
  @li mp_dirlist.sas

  <h4> Related Files </h4>
  @li mp_hashdataset.sas
  @li mp_hashdirectory.test.sas
  @li mp_md5.sas

  @param [in] inloc Full filepath of the directory to be hashed (unquoted)
  @param [in] iftrue= (1=1) A condition under which the macro should be executed
  @param [in] maxdepth= (0) Set to a positive integer to indicate the level of
    subdirectory scan recursion - eg 3, to go `./3/levels/deep`. For unlimited
    recursion, set to MAX.
  @param [in] method= (MD5) the hashing method to use. Available options:
    @li MD5
    @li SHA1
    @li SHA256
    @li SHA384
    @li SHA512
    @li CRC32
  @param [out] outds= (work.mp_hashdirectory) The output dataset. Contains:
    @li directory - the parent folder
    @li file_hash - the hash output
    @li hash_duration - how long the hash took (first hash always takes longer)
    @li file_path - /full/path/to/each/file.ext
    @li file_or_folder - contains either "file" or "folder"
    @li level - the depth of the directory (top level is 0)

  @version 9.4m6
  @author Allan Bowe
**/

%macro mp_hashdirectory(inloc,
  outds=work.mp_hashdirectory,
  method=MD5,
  maxdepth=0,
  iftrue=%str(1=1)
)/*/STORE SOURCE*/;

%local curlevel tempds ;

%if not(%eval(%unquote(&iftrue))) %then %return;

/* get the directory listing */
%mp_dirlist(path=&inloc, outds=&outds, maxdepth=&maxdepth, showparent=YES)

/* create the hashes */
data &outds;
  set &outds (rename=(filepath=file_path));
  length FILE_HASH $32 HASH_DURATION 8;
  keep directory file_hash hash_duration file_path file_or_folder level;

  ts=datetime();
  if file_or_folder='file' then do;
    /* if file is empty, hashing_file will break - so ignore / delete */
    length fname val $8;
    drop fname val fid is_empty;
    rc=filename(fname,file_path);
    fid=fopen(fname);
    if fid > 0 then do;
      rc=fread(fid);
      is_empty=fget(fid,val);
    end;
    rc=fclose(fid);
    rc=filename(fname);
    if is_empty ne 0 then delete;
    else file_hash=hashing_file("&method",cats(file_path),0);
  end;
  hash_duration=datetime()-ts;
run;

proc sort data=&outds ;
  by descending level directory file_path;
run;

data _null_;
  set &outds;
  call symputx('maxlevel',level,'l');
  stop;
run;

/* now hash the hashes to populate folder hashes, starting from the bottom */
%do curlevel=&maxlevel %to 0 %by -1;
  data work._data_ (keep=directory file_hash);
    set &outds;
    where level=&curlevel;
    by descending level directory file_path;
    length str $32767 tmp_hash $32;
    retain str tmp_hash ;
    /* reset vars when starting a new directory */
    if first.directory then do;
      str='';
      tmp_hash='';
      i=0;
    end;
    /* hash each chunk of 100 file hashes */
    i+1;
    str=cats(str,file_hash);
    if mod(i,100)=0 or last.directory then do;
      tmp_hash=hashing("&method",cats(tmp_hash,str));
      str='';
    end;
    /* output the hash at directory level */
    if last.directory then do;
      file_hash=tmp_hash;
      output;
    end;
    if last.level then stop;
  run;
  %let tempds=&syslast;
  /* join the hash back into the main table */
  proc sql undo_policy=none;
  create table &outds as
    select a.directory
      ,coalesce(b.file_hash,a.file_hash) as file_hash
      ,a.hash_duration
      ,a.file_path
      ,a.file_or_folder
      ,a.level
    from &outds a
    left join &tempds b
    on a.file_path=b.directory
    order by level desc, directory, file_path;
  drop table &tempds;
%end;

%mend mp_hashdirectory;

@@ -73,6 +73,10 @@
         "allowInsecureRequests": false
       },
       "appLoc": "/sasjs/core",
+      "deployConfig": {
+        "deployServicePack": true,
+        "deployScripts": []
+      },
       "macroFolders": [
         "server",
         "tests/serveronly"
@@ -105,6 +109,16 @@
         "deployServicePack": true
       },
       "contextName": "SAS Job Execution compute context"
+    },
+    {
+      "name": "sasjs9",
+      "serverUrl": "https://sas9.4gl.io",
+      "serverType": "SASJS",
+      "appLoc": "/Public/app/sasjs9",
+      "deployConfig": {
+        "deployServicePack": true,
+        "deployScripts": []
+      }
     }
   ]
 }

224

sasjs/utils/create_sas_package.sas

Normal file

@@ -0,0 +1,224 @@
/**
  @file
  @brief Deploy repo as a SAS PACKAGES module
  @details After every release, this program is executed to update the SASPAC
  repo with the latest macros (and same version number).
  The program is first compiled using sasjs compile, then executed using
  sasjs run.

  Requires the server to have SSH keys.

  <h4> SAS Macros </h4>
  @li mp_gitadd.sas
  @li mp_gitreleaseinfo.sas
  @li mp_gitstatus.sas

**/


/* get package version */
%mp_gitreleaseinfo(GITHUB,sasjs/core,outlib=splib)
data _null_;
  set splib.root;
  call symputx('version',TAG_NAME);
run;

/* clone the source repo */
%let dir = %sysfunc(pathname(work))/core;
%put source clone rc=%sysfunc(GITFN_CLONE(https://github.com/sasjs/core,&dir));


/*
  clone the target repo.
  If you have issues, see: https://stackoverflow.com/questions/74082874
*/
options dlcreatedir;
/* define dirOut before the libname that creates the directory */
%let dirOut = %sysfunc(pathname(work))/package;
libname _ "&dirOut.";
%put tgt clone rc=%sysfunc(GITFN_CLONE(
  git@github.com:allanbowe/sasjscore.git,
  &dirOut,
  git,
  %str( ),
  /home/sasjssrv/.ssh/id_ecdsa.pub,
  /home/sasjssrv/.ssh/id_ecdsa
));


/*
  Prepare Package Metadata
*/
data _null_;
  infile CARDS4;
  file "&dirOut./description.sas";
  input;
  if _infile_ =: 'Version:' then put "Version: &version.";
  else put _infile_;
CARDS4;
Type: Package
Package: SASjsCore
Title: SAS Macros for Application Development
Version: $(PLACEHOLDER)
Author: Allan Bowe
Maintainer: 4GL Ltd
License: MIT
Encoding: UTF8

DESCRIPTION START:

The SASjs Macro Core library is a component of the SASjs framework, the
source for which is available here: https://github.com/sasjs

Macros are divided by:

* Macro Functions (prefix mf_)
* Macro Procedures (prefix mp_)
* Macros for Metadata (prefix mm_)
* Macros for SASjs Server (prefix ms_)
* Macros for Viya (prefix mv_)

DESCRIPTION END:
;;;;
run;

/*
  Prepare Package License
*/
data _null_;
  file "&dirOut./license.sas";
  infile "&dir/LICENSE";
  input;
  put _infile_;
run;

/*
  Extract Core files into MacroCore Package location
*/
data members(compress=char);
  length dref dref2 $ 8 name name2 $ 32 path $ 2048;
  rc = filename(dref, "&dir.");
  put dref=;
  did = dopen(dref);
  if did then
    do i = 1 to dnum(did);
      name = dread(did, i);
      if name in
        ("base" "ddl" "fcmp" "lua" "meta" "metax" "server" "viya" "xplatform")
      then do;
        rc = filename(dref2,catx("/", "&dir.", name));
        put dref2= name;
        did2 = dopen(dref2);

        if did2 then
          do j = 1 to dnum(did2);
            name2 = dread(did2, j);
            path = catx("/", "&dir.", name, name2);
            if "sas" = scan(name2, -1, ".") then output;
          end;
        rc = dclose(did2);
        rc = filename(dref2);
      end;
    end;
  rc = dclose(did);
  rc = filename(dref);
  keep name name2 path;
run;

%let temp_options = %sysfunc(getoption(source)) %sysfunc(getoption(notes));
options nosource nonotes;
data _null_;
  set members;
  by name notsorted;

  ord + first.name;

  if first.name then
    do;
      call execute('libname _ '
        !! quote(catx("/", "&dirOut.", put(ord, z3.)!!"_macros"))
        !! ";"
      );
      put @1 "./" ord z3. "_macros/";
    end;

  put @10 name2;
  call execute("
    data _null_;
      infile " !! quote(strip(path)) !! ";
      file " !! quote(catx("/", "&dirOut.", put(ord, z3.)!!"_macros", name2)) !!";
      input;
      select;
        when (2 = trigger) put _infile_;
        when (_infile_ = '/**') do; put '/*** HELP START ***//**'; trigger+1; end;
        when (_infile_ = '**/') do; put '**//*** HELP END ***/'; trigger+1; end;
        otherwise put _infile_;
      end;
    run;");

run;
options &temp_options.;

/*
  Generate SASjsCore Package
*/
%GeneratePackage(
  filesLocation=&dirOut
)

/**
  * apply new version in a github action
  * 1. create folder
  * 2. create template yaml
  * 3. replace version number
  */

%mf_mkdir(&dirout/.github/workflows)

%let desc=Version &version of sasjs/core is now on SAS PACKAGES :ok_hand:;
data _null_;
  file "&dirout/.github/workflows/release.yml";
  put "name: SASjs Core Package Publish Tag";
  put "on:";
  put "  push:";
  put "    branches:";
  put "      - main";
  put "jobs:";
  put "  update:";
  put "    runs-on: ubuntu-latest";
  put "    steps:";
  put "      - uses: actions/checkout@master";
  put "      - name: Make Release";
  put "        uses: alice-biometrics/release-creator/@v1.0.5";
  put "        with:";
  put "          github_token: ${{ secrets.GH_TOKEN }}";
  put "          branch: main";
  put "          draft: false";
  put "          version: &version";
  put "          description: '&desc'";
run;


/**
  * Add, Commit & Push!
  */
%mp_gitstatus(&dirout,outds=work.gitstatus,mdebug=1)
%mp_gitadd(&dirout,inds=work.gitstatus,mdebug=1)

data _null_;
  rc=gitfn_commit("&dirout"
    ,"HEAD","&sysuserid","sasjs@core"
    ,"FEAT: Releasing &version"
  );
  put rc=;
  rc=git_push(
    "&dirout"
    ,"git"
    ,""
    ,"/home/sasjssrv/.ssh/id_ecdsa.pub"
    ,"/home/sasjssrv/.ssh/id_ecdsa"
  );
run;

26

tests/base/mp_dictionary.test.sas

Normal file

@@ -0,0 +1,26 @@
/**
  @file
  @brief Testing mp_dictionary.sas macro

  <h4> SAS Macros </h4>
  @li mp_dictionary.sas
  @li mp_assert.sas

**/

libname test (work);
%mp_dictionary(lib=test)

proc sql;
create table work.compare1 as select * from test.styles;
create table work.compare2 as select * from dictionary.styles;

proc compare base=compare1 compare=compare2;
run;
%put _all_;


%mp_assert(
  iftrue=(&sysinfo=0),
  desc=Compare was exact,
  outds=work.test_results
)

53

tests/base/mp_gitadd.test.sas

Normal file

@@ -0,0 +1,53 @@
/**
  @file
  @brief Testing mp_gitadd.sas macro

  <h4> SAS Macros </h4>
  @li mf_deletefile.sas
  @li mf_writefile.sas
  @li mp_gitadd.sas
  @li mp_gitstatus.sas
  @li mp_assert.sas

**/

/* clone the source repo */
%let dir = %sysfunc(pathname(work))/core;
%put source clone rc=%sysfunc(GITFN_CLONE(https://github.com/sasjs/core,&dir));

/* add a file */
%mf_writefile(&dir/somefile.txt,l1=some content)
/* change a file */
%mf_writefile(&dir/readme.md,l1=new readme)
/* delete a file */
%mf_deletefile(&dir/package.json)

/* Run git status */
%mp_gitstatus(&dir,outds=work.gitstatus)

%let test1=0;
proc sql noprint;
select count(*) into: test1 from work.gitstatus where staged='FALSE';

/* should be three unstaged changes now */
%mp_assert(
  iftrue=(&test1=3),
  desc=3 changes are ready to add,
  outds=work.test_results
)

/* add them */
%mp_gitadd(&dir,inds=work.gitstatus,mdebug=&sasjs_mdebug)

/* check status */
%mp_gitstatus(&dir,outds=work.gitstatus2)
%let test2=0;
proc sql noprint;
select count(*) into: test2 from work.gitstatus2 where staged='TRUE';

/* should be three staged changes now */
%mp_assert(
  iftrue=(&test2=3),
  desc=3 changes were added,
  outds=work.test_results
)

30

tests/base/mp_gitreleaseinfo.test.sas

Normal file

@@ -0,0 +1,30 @@
/**
  @file
  @brief Testing mp_gitreleaseinfo.sas macro

  <h4> SAS Macros </h4>
  @li mp_gitreleaseinfo.sas
  @li mp_assert.sas